
Artificial intelligence (AI) is being integrated rapidly into business operations, and industries worldwide are changing as a result. As businesses adopt AI to boost productivity, enhance customer experiences, and spur innovation, the ethical issues it raises have become critical. Investors need to understand these issues, both to protect themselves from risk and to take advantage of long-term, sustainable growth opportunities. This article examines the ethical implications of AI in business and offers investors a framework for navigating this complex and fast-changing environment.

Ethical Issues

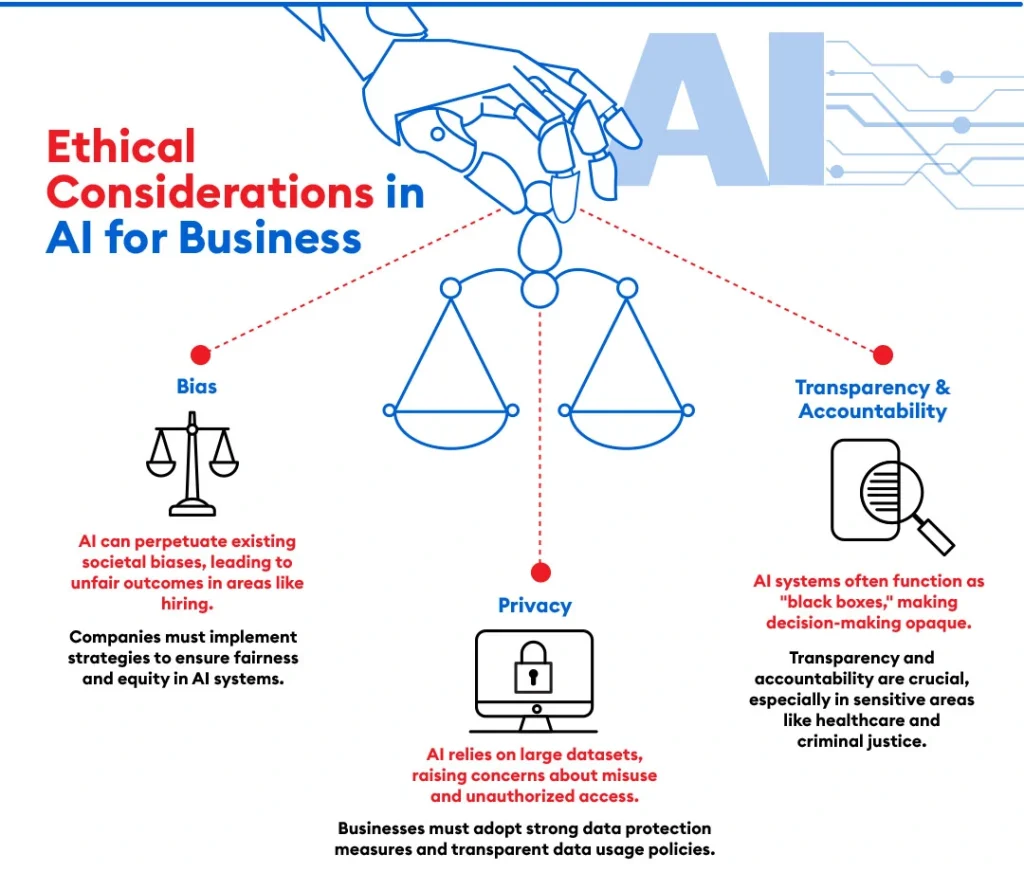

AI’s transformative potential comes with critical ethical concerns, including bias, privacy risks, and a lack of transparency. Addressing these issues is essential to ensure AI systems are fair, respectful of privacy, and accountable in their decision-making.

1. Bias

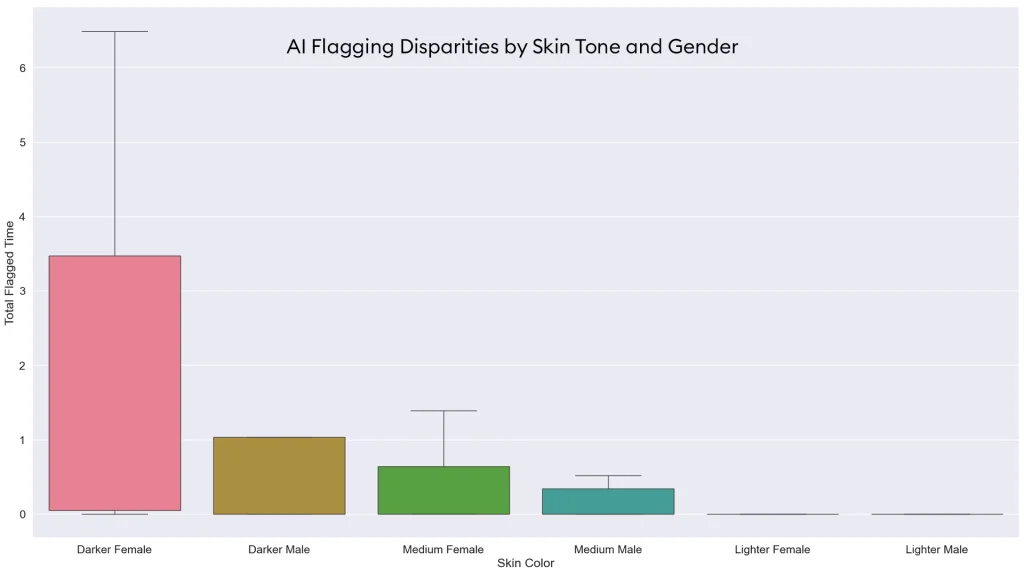

Bias and discrimination in AI are significant concerns. The creation and application of AI algorithms and machine learning models are human-driven processes, influenced by both conscious and unconscious biases. These biases can be embedded in the data sets used for training AI, which often come from the internet and other sources reflecting existing societal prejudices.

AI systems trained on historical data may unintentionally reproduce existing biases, leading to unfair outcomes. For instance, an AI-based hiring tool could favor specific demographics if its training data reflects past hiring prejudices. This is what happened at Amazon, which scrapped an AI-based recruiting tool after it was found to show bias against women [1].

To prevent these biases from infiltrating AI systems, it is essential to involve interdisciplinary teams and conduct thorough testing during development. Without these precautions, machine learning algorithms may inadvertently perpetuate biases, reinforcing age, gender, race, and disability inequalities. Investors must evaluate how companies manage these biases and implement strategies to ensure that their AI systems are fair and equitable.
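The "thorough testing" mentioned above can be made concrete with simple outcome metrics. One widely used screen in US hiring is the "four-fifths rule" from the EEOC's Uniform Guidelines on Employee Selection Procedures: if one group's selection rate is below 80% of the rate of the most-selected group, that is generally treated as evidence of possible adverse impact. The sketch below assumes a hypothetical screening model whose decisions are logged as (group, selected) pairs; the function names and the sample data are illustrative assumptions, not any company's actual audit method, and a real bias review would go well beyond a single ratio.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """Return the share of candidates selected in each group.

    `outcomes` is an iterable of (group, selected) pairs, where `selected`
    is True if the (hypothetical) model advanced the candidate.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}


def adverse_impact_ratios(outcomes, threshold=0.8):
    """Compare each group's selection rate with the highest group's rate.

    Returns {group: (ratio, flagged)}, where `flagged` is True when the
    ratio falls below `threshold` (0.8 under the four-fifths rule).
    """
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        # Nobody was selected in any group, so there is no disparity to measure.
        return {g: (1.0, False) for g in rates}
    return {g: (rate / best, rate / best < threshold) for g, rate in rates.items()}


if __name__ == "__main__":
    # Hypothetical screening results: 100 applicants per group.
    outcomes = (
        [("men", True)] * 40 + [("men", False)] * 60
        + [("women", True)] * 24 + [("women", False)] * 76
    )
    for group, (ratio, flagged) in adverse_impact_ratios(outcomes).items():
        print(f"{group}: ratio={ratio:.2f} flagged={flagged}")
```

In this made-up example, women are selected at 24% and men at 40%, giving a ratio of 0.60, well below the 0.8 threshold, so the tool would be flagged for further review. For an investor, the useful question is less about the exact metric and more about whether a company runs checks like this routinely and acts on the results.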

2. Privacy

Privacy concerns are a major ethical issue, especially as businesses gather and analyze large volumes of personal data. The risk of misuse or unauthorized access to sensitive information poses serious threats to both consumers and companies. Businesses that implement strong data protection measures and transparent data usage policies are more likely to earn consumer trust and regulatory approval, making them appealing to investors.

Training machine learning models requires extensive datasets. This data, sourced from social media, mobile devices, and other technologies, fuels services such as search engines, recommendation systems, and voice assistants. By tracking user interactions such as clicks, views, posts, searches, and likes, AI can build detailed user profiles and analytics. These profiles can create significant privacy issues if the data is sold or used without user consent.

AI’s ability to detect patterns and predict characteristics from data means it can infer personal information that individuals may not wish to disclose. For example, AI used in recruitment might deduce an applicant’s mental health, political views, or likelihood of taking parental leave based on the information provided. Such deductions could influence hiring decisions, raising concerns about privacy violations.

Moreover, AI’s integration with other technologies can amplify privacy risks. For instance, while CCTV cameras in public places are generally accepted for enhancing security, their combination with AI facial recognition software could lead to extensive surveillance, which many would find excessively intrusive.

These concerns highlight the importance of ensuring that AI systems respect individuals’ privacy and use personal data responsibly.

3. Transparency and Accountability

Transparency and accountability in AI decision-making are critical issues. AI systems often function as “black boxes,” making it challenging to understand how specific decisions are made [2].

This opacity can create accountability problems, particularly in sensitive areas like healthcare or criminal justice, where AI decisions can have serious repercussions. Investors should evaluate whether companies are committed to enhancing the transparency of their AI systems and ensuring accountability for their outcomes.

Another major ethical concern is AI’s potential to manipulate and deceive. If used unethically, AI can exacerbate social divisions, enable political repression, and spread misinformation. Individuals’ digital footprints contain vast amounts of personal data, revealing their preferences, political views, financial status, and more. While businesses can use this data to personalize services and target advertising, it can also be used to build manipulative tools that prey on individuals’ vulnerabilities, influencing their decisions and potentially undermining their autonomy.

Moreover, AI can generate factually incorrect content that is distributed alongside legitimate news. This can mislead people on crucial issues, affecting decisions related to voting and vaccinations, eroding trust, and more. When this content takes the form of synthetic video, known as a “deepfake,” it can falsely depict public figures, distorting reality and spreading falsehoods. For instance, a Chinese government misinformation campaign used AI-generated videos with fake news anchors delivering false reports to disseminate state-sponsored propaganda. One such video features an AI presenter criticizing Taiwan’s outgoing president, Tsai Ing-wen, through metaphors and wordplay on her name [3].

This video is part of a broader trend: AI-generated deepfake news anchors are increasingly used to spread false information, and these digital avatars are appearing more often on social media as the underlying technology becomes more accessible.

Conclusion

As AI technology evolves, the ethical considerations surrounding its use become increasingly important for investors. While AI offers substantial benefits, it also presents challenges related to bias, privacy, and transparency. Investors must scrutinize how companies manage these ethical issues to avoid pitfalls and identify sustainable investment opportunities. Companies that proactively address biases, safeguard privacy, and ensure transparent AI practices will likely gain consumer trust and regulatory approval, positioning themselves as responsible and attractive investment prospects. By prioritizing ethical AI practices, investors can navigate the complexities of AI and leverage its benefits responsibly, shaping the future of business and investment.

Graph Citations:

AI Chatbots and Risk of Spreading Disinformation:

- Cox, A., Doyle, M., & Payne, T. (2023). Exploring the ethical implications of ChatGPT and other AI Chatbots and the regulation of disinformation propagation [Dataset]. Retrieved from Figshare.

AI Flagging Disparities by Skin Tone and Gender:

- Fernandez, A., & Smith, J. (2022). Evaluating bias in facial detection systems used in American educational institutions. Frontiers in Education, 7(881449). Retrieved from Frontiers.

References:

[1] Reuters: https://www.reuters.com/article/us-amazon-com-jobs-automation-insight/amazon-scraps-secret-ai-recruiting-tool-that-showed-bias-against-women-idUSKCN1MK08G/

[2] DigitalOcean: https://www.digitalocean.com/resources/article/ai-and-privacy

[3] The Guardian: https://www.theguardian.com/technology/article/2024/may/18/how-china-is-using-ai-news-anchors-to-deliver-its-propaganda